About

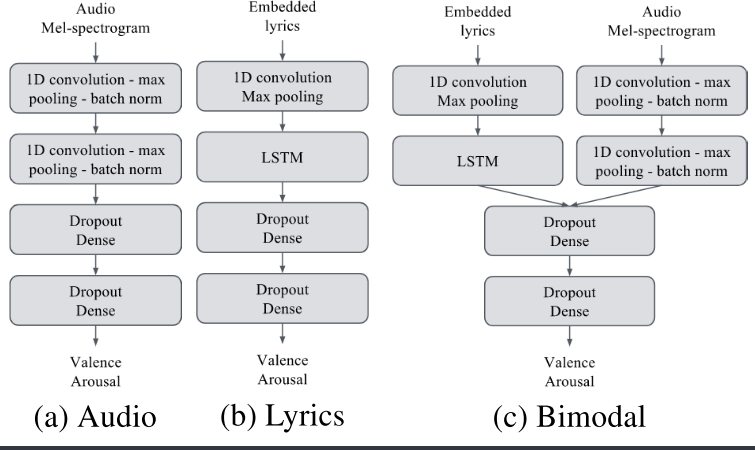

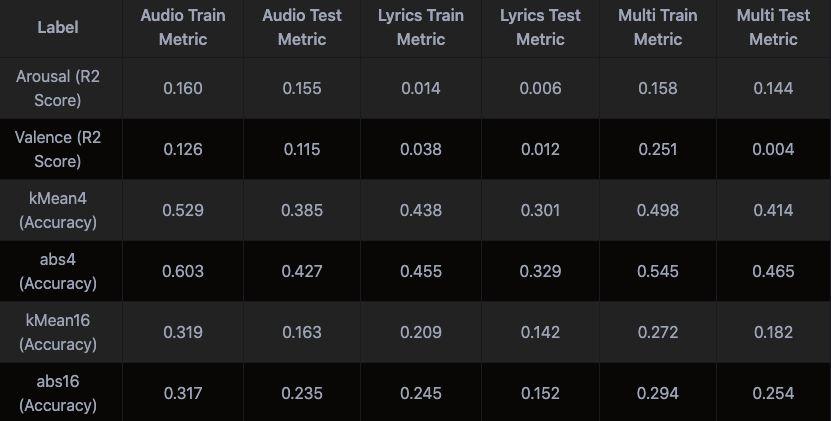

The baseline paper is "Music Mood Detection Based On Audio And Lyrics With Deep Neural Net". R. Delbouys et al. addressed the Music Emotion Recognition problem with a model built from two CNN layers and two dense layers, using Deezer's music database and lyrics. Their main contribution is the use of a multi-modal architecture for a regression task. They report the R-squared value, which measures the explanatory power of a model, as the performance of their models.
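As a reminder of what the R-squared metric measures, here is a minimal sketch in plain Python. The function name `r_squared` and the sample values are illustrative assumptions, not code or data from the baseline paper or this repository.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    # Residual sum of squares: error left over after prediction.
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    # Total sum of squares: variance of the targets around their mean.
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical targets and predictions, for illustration only.
targets = [1.0, 2.0, 3.0]
perfect = [1.0, 2.0, 3.0]
mean_only = [2.0, 2.0, 2.0]

print(r_squared(targets, perfect))    # perfect predictions
print(r_squared(targets, mean_only))  # always predicting the mean
```

A model that always predicts the mean of the targets scores 0, and a perfect model scores 1, so the value can be read as the share of target variance the model explains.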

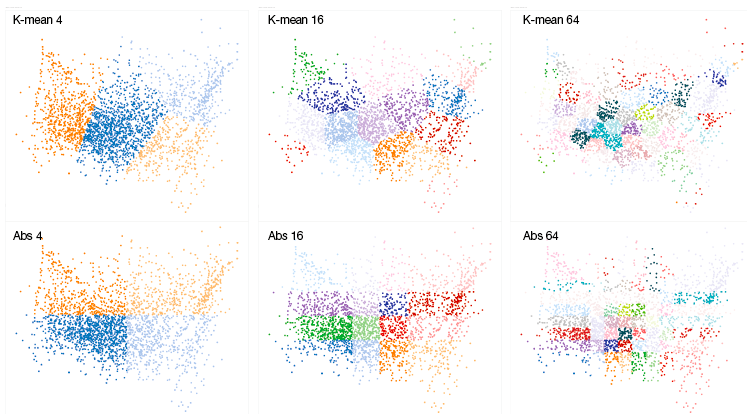

To understand the problem in more depth, we split the Music Emotion Recognition task into a regression task and a classification task. We performed label clustering in two ways, based on the hypothesis that humans perceive emotions as large clusters rather than as specific points. As a result, the audio-only model showed the best performance on the regression task, while the bi-modal model performed better on the classification task.
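To illustrate how continuous emotion labels can be turned into classes, the sketch below assigns each label to one of four quadrants of a valence-arousal plane. This is only one possible scheme and is not necessarily either of the two clustering methods used in this project. The label format (valence, arousal), the `center` threshold, and the function name `quadrant_label` are all assumptions made for the example.

```python
def quadrant_label(valence, arousal, center=0.0):
    """Map a continuous (valence, arousal) pair to a class id 0..3.

    Hypothetical scheme: split the plane at `center` on both axes.
    """
    if valence >= center and arousal >= center:
        return 0  # positive valence, high arousal
    if valence < center and arousal >= center:
        return 1  # negative valence, high arousal
    if valence < center and arousal < center:
        return 2  # negative valence, low arousal
    return 3      # positive valence, low arousal


# Made-up labels, for illustration only.
labels = [(0.8, 0.6), (-0.5, 0.7), (-0.3, -0.9), (0.4, -0.2)]
classes = [quadrant_label(v, a) for v, a in labels]
print(classes)
```

Once the labels are discrete, the same audio and lyrics inputs can feed a classifier instead of a regressor, which is the kind of setup the classification task describes.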